Making a piano keyboard

In this section, we will use some of the core Audio API interfaces to create a simple piano keyboard. We will learn what an AudioParam is and how to use it to change the pitch of the sound.

Base application

Like in the previous example, we will start with a simple app with a couple of buttons so we don't need to worry about the UI later. You can just copy and paste the code below to your project.

import React from 'react';

import { View, Text, Pressable } from 'react-native';

type KeyName = 'A' | 'B' | 'C' | 'D' | 'E';

interface ButtonProps {

keyName: KeyName;

onPressIn: (key: KeyName) => void;

onPressOut: (key: KeyName) => void;

}

const Button = ({ onPressIn, onPressOut, keyName }: ButtonProps) => (

<Pressable

onPressIn={() => onPressIn(keyName)}

onPressOut={() => onPressOut(keyName)}

style={({ pressed }) => ({

margin: 4,

padding: 12,

borderRadius: 2,

backgroundColor: pressed ? '#d2e6ff' : '#abcdef',

})}

>

<Text style={{ color: 'white' }}>{`${keyName}`}</Text>

</Pressable>

);

export default function SimplePiano() {

const onKeyPressIn = (which: KeyName) => {};

const onKeyPressOut = (which: KeyName) => {};

return (

<View

style={{

flex: 1,

justifyContent: 'center',

alignItems: 'center',

flexDirection: 'row',

}}

>

{Keys.map((key) => (

<Button

onPressIn={onKeyPressIn}

onPressOut={onKeyPressOut}

keyName={key}

key={key}

/>

))}

</View>

);

}

Create audio context and preload the data

Like previously, we will need to preload the audio files in order to be able to play them. Using the interfaces we already know, we will download them and store in the memory using the good old useRef hook.

First, we have the import section and the list of sources we will be using. Let’s also make things easier by using type shorthand for the partial record:

import { AudioBuffer, AudioContext } from 'react-native-audio-api';

/* ... */

type PR<T> = Partial<Record<KeyName, T>>;

const sourceList: PR<string> = {

A: 'https://software-mansion.github.io/react-native-audio-api/audio/sounds/C4.mp3',

C: 'https://software-mansion.github.io/react-native-audio-api/audio/sounds/Ds4.mp3',

E: 'https://software-mansion.github.io/react-native-audio-api/audio/sounds/Fs4.mp3',

};

Then, we will want to fetch the audio files and store them. We want the audio data to be available to play as soon as possible, so we will use the useEffect hook to download them and store them in the useRef hook for simplicity.

export default function SimplePiano() {

const audioContextRef = useRef<AudioContext | null>(null);

const bufferMapRef = useRef<PR<AudioBuffer>>({});

useEffect(() => {

if (!audioContextRef.current) {

audioContextRef.current = new AudioContext();

}

Object.entries(sourceList).forEach(async ([key, url]) => {

bufferListRef.current[key as KeyName] = await fetch(url)

.then((response) => response.arrayBuffer())

.then((arrayBuffer) =>

audioContextRef.current!.decodeAudioData(arrayBuffer)

);

});

}, []);

}

Playing the sounds

Now it is finally time to play the sounds. We will use the AudioBufferSourceNode and simply play the buffers.

export default function SimplePiano() {

const onKeyPressIn = (which: KeyName) => {

const audioContext = audioContextRef.current;

const buffer = bufferMapRef.current[which];

if (!audioContext || !buffer) {

return;

}

const source = new AudioBufferSourceNode(audioContext, {

buffer,

});

source.connect(audioContext.destination);

source.start();

};

}

When we put everything together, we will get something like this:

Great! But there are a few things off here:

- We are not stopping the sound when the button is released, which is how a piano should work, right? 🙃

- You have probably noticed in the previous section, but we are missing sounds for keys 'B' and 'D'.

Let’s see how we can address these issues using the Audio API. We will go through them one by one. Ready?

Key release

To stop the sound when keys are released, we will need to store somewhere source nodes, in order to be able to call stop on them later. Just like with the audio context, let's use the useRef hook for this.

const playingNotesRef = useRef<PR<AudioBufferSourceNode>>({});

Now we need to modify the onKeyPressIn function a bit

const onKeyPressIn = (which: KeyName) => {

const audioContext = audioContextRef.current!;

const buffer = bufferMapRef.current[which];

const source = new AudioBufferSourceNode(audioContext, {

buffer,

});

source.connect(audioContext.destination);

source.start();

playingNotesRef.current[which] = source;

};

And finally, we can implement the onKeyPressOut function

const onKeyPressOut = (which: KeyName) => {

const source = playingNotesRef.current[which];

if (source) {

source.stop();

}

};

Putting it all together again, we get:

And they stop on release, just as we wanted. But if we hold the keys for a short time, it sounds a bit strange. Also, have you noticed that the sound is simply cut off when we release the key? 🤔 It leaves a bit of an unpleasant feeling, right? So let’s try to make it a bit smoother.

Envelopes ✉️

We will start from the end this time, and finally, we will use new type of audio node - GainNode 🎉

GainNode is a simple node that can change the volume of any node (or nodes) connected to it. The GainNode has a single element called AudioParam , which is also named gain..., a bit lame, but we have to live with that.

What is an AudioParam?

An AudioParam is an interface that controls various aspects of most audio nodes, like volume (in the GainNode described above), pan or frequency. It allows us to control these aspects over time, enabling smooth transitions and complex audio effects.

For our use case, we are interested in two methods of an AudioParam:

So, how can we use that for our piano?

The title of one of the previous sections might ring a bell 🔔.

Please read ADSR and envelope section to become familiar with common sound manipulation techniques.

Implementing the envelope

With all the knowledge we have gathered, let's get back to the code. In our onKeyPressIn function, besides creating the source node, we will create a GainNode which will stand in the middle between the source and destination nodes, acting as our envelope.

We want to implement the attack in onKeyPressIn function, and release in onKeyPressOut. In order to be able to access the envelope in both functions we will have to store it somewhere, so let's modify the playingNotesRef introduced earlier.

Also, let’s not forget about the issue with short key presses. We will address that by enforcing a minimal duration of the sound to one second (as it works nicely with the samples we have 😉).

Let’s start with the types:

interface PlayingNote {

source: AudioBufferSourceNode;

envelope: GainNode;

startedAt: number;

}

and the useRef hook:

const playingNotesRef = useRef<PR<PlayingNote>>({});

Now we can modify the onKeyPressIn function:

const onKeyPressIn = (which: KeyName) => {

const audioContext = audioContextRef.current!;

const buffer = bufferMapRef.current[which];

const tNow = audioContext.currentTime;

if (!audioContext || !buffer) {

return;

}

const source = audioContext.createBufferSource();

source.buffer = buffer;

const envelope = audioContext.createGain();

source.connect(envelope);

envelope.connect(audioContext.destination);

envelope.gain.setValueAtTime(0.001, tNow);

envelope.gain.exponentialRampToValueAtTime(1, tNow + 0.1);

source.start(tNow);

playingNotesRef.current[which] = { source, envelope, startedAt: tNow };

};

and the onKeyPressOut function:

const onKeyPressOut = (which: KeyName) => {

const audioContext = audioContextRef.current!;

const playingNote = playingNotesRef.current[which];

if (!playingNote || !audioContext) {

return;

}

const { source, envelope, startedAt } = playingNote;

const tStop = Math.max(audioContext.currentTime, startedAt + 5);

envelope.gain.exponentialRampToValueAtTime(0.0001, tStop + 0.08);

envelope.gain.setValueAtTime(0, tStop + 0.09);

source.stop(tStop + 0.1);

playingNotesRef.current[which] = undefined;

};

As a result, we can hear something like this:

And it finally sounds smooth and nice. But what about decay and sustain phases? Both are handled by the audio samples themselves, so we do not need to worry about them. To be honest, same goes for the attack phase, but we have implemented it for the sake of this guide. 🙂

So, the only piece left is addressing the missing sample files for the 'B' and 'D' keys. What can we do about that?

Tampering with the playback rate

The AudioBufferSourceNode also has its own AudioParam, called playbackRate as the title suggests. It allows us to change the speed of the playback of the audio buffer.

Yay! Nice. But how can we use that to make the missing keys sound? I will keep this short, as this guide is already quite long, so let’s wrap up!

When we change the speed of a sound, it will also change its pitch (frequency). So, we can use that to make the missing keys sound.

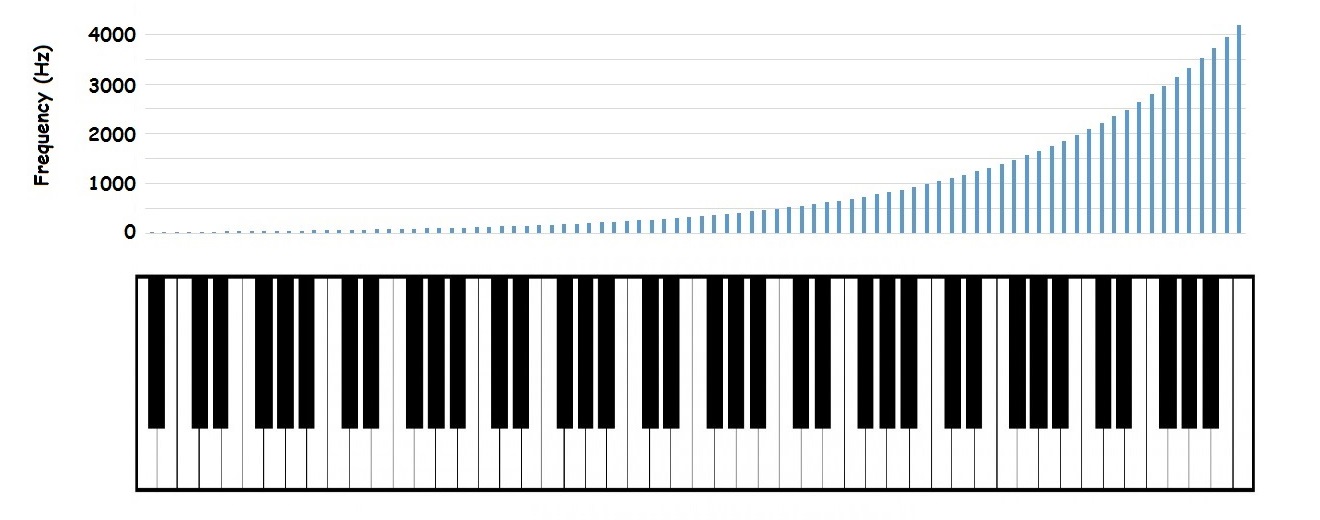

Each piano key has its own dominant frequency (e.g., the frequency of the A4 key is 440Hz). We can check the frequency of each key, calculate the ratio between them, and use that ratio to adjust the playback rate of the buffers we have.

For our example, let's use these frequencies as the base for our calculations:

const noteToFrequency = {

A: 261.626, // real piano middle C

B: 277.193, // Db

C: 311.127, // Eb

D: 329.628, // E

E: 369.994, // Gb

};

First, we need to find the closest key to the missing one. We can do this in simple for loop:

function getClosest(key: KeyName) {

let closestKey = 'A';

let minDiff = noteToFrequency.A - noteToFrequency[key];

for (const sourcedKey of Object.keys(sourceList)) {

const diff = noteToFrequency[sourcedKey] - noteToFrequency[key];

if (Math.abs(diff) < Math.abs(minDiff)) {

minDiff = diff;

closestKey = sourcedKey;

}

}

return closestKey;

}

Now, we simply use the function in onKeyPressIn when the buffer is not found and adjust the playback rate for the source node accordingly:

const onKeyPressIn = (which: KeyName) => {

let buffer = bufferListRef.current[which];

const aCtx = audioContextRef.current;

let playbackRate = 1;

if (!buffer) {

const closestKey = getClosest(which);

const closestBuffer = bufferMapRef.current[closestKey];

playbackRate = noteToFrequency[closestKey] / noteToFrequency[which];

}

const source = aCtx.createBufferSource();

const envelope = aCtx.createGain();

source.buffer = buffer;

};

Final effects

As before, you can see the final results in the live example below, along with the full source code.

Summary

In this guide, we have learned how to create a simple piano keyboard with the help of the GainNode and AudioParams. To sum up:

AudioParamis an interface that provides ways to control various aspects of audio nodes over time.GainNodeis a simple node that can change the volume of any node connected to it.AudioBufferSourceNodehas a parameter calledplaybackRatethat allows you to change the speed of the audio buffer's playback, thereby altering the pitch of the sound.- We can use

GainNodeto create envelopes, making the sound transitions smoother and more pleasant. - We have learned how to use the Audio API in the React environment, simulating a more production-like scenario.

What's next?

In the next section, we will learn how we can generate noise using the audio buffer source node.