BaseAudioContext

The BaseAudioContext interface acts as a supervisor of audio-processing graphs. It provides key processing parameters such as the current time, the output destination, and the sample rate.

It is also responsible for creating nodes and managing the lifecycle of the audio-processing graph.

BaseAudioContext cannot be used directly. Its functionality must be accessed through one of its derived interfaces: AudioContext or OfflineAudioContext.

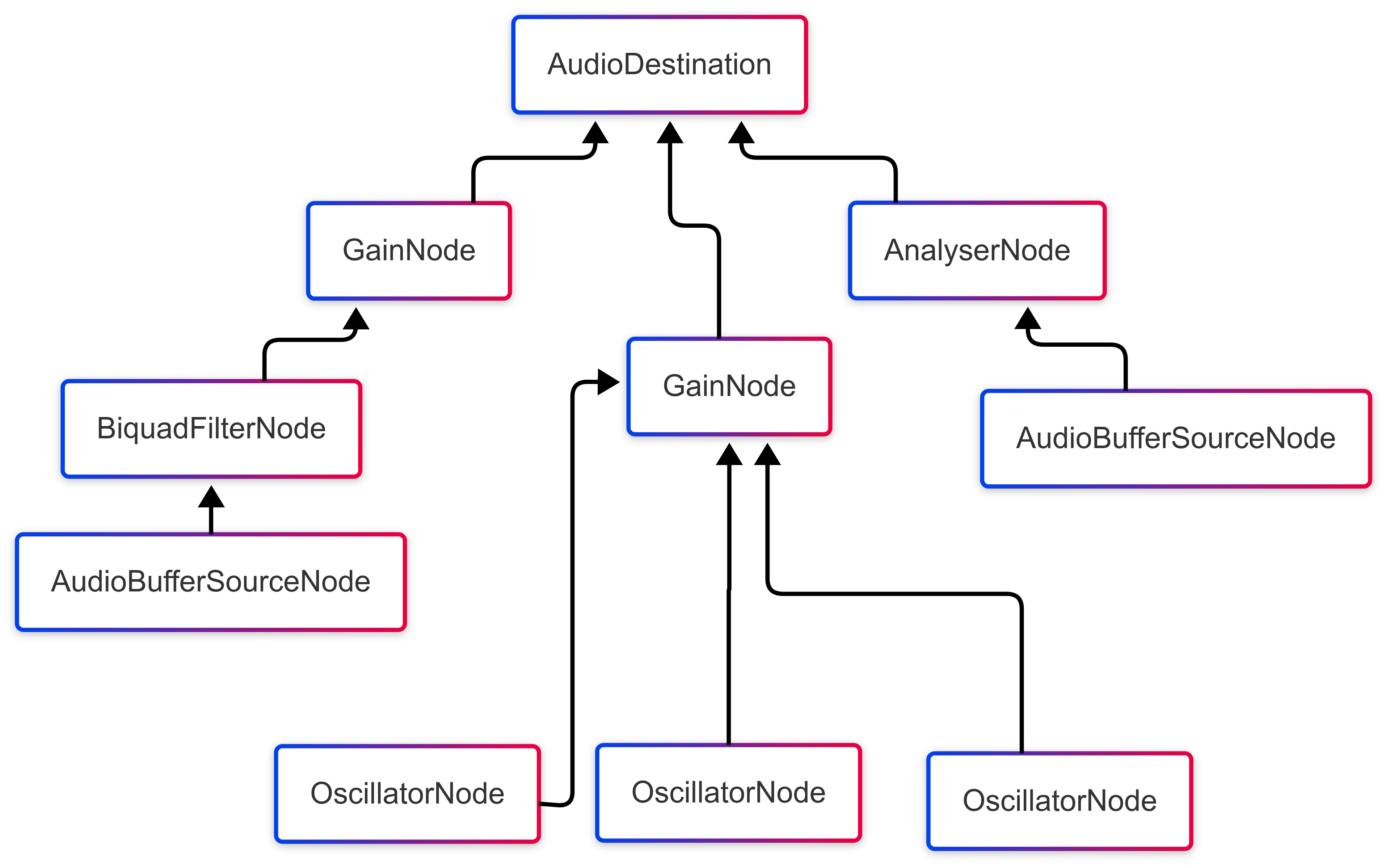

Audio graph

An audio graph is a structured representation of audio processing elements and their connections within an audio context. The graph consists of various types of nodes, each performing specific audio operations, connected in a network that defines the audio signal flow. In general we can distinguish four types of nodes:

- Source nodes (e.g. AudioBufferSourceNode, OscillatorNode)
- Effect nodes (e.g. GainNode, BiquadFilterNode)
- Analysis nodes (e.g. AnalyserNode)
- Destination nodes (e.g. AudioDestinationNode)
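The signal flow described above can be sketched as a chain of connected nodes. This is a minimal illustration, not the library's own code: `ConnectableNode` and `connectChain` are hypothetical names, and the sketch assumes that nodes expose a `connect(destination)` method as in the Web Audio API.

```typescript
// Minimal structural type: anything exposing connect() can be part of a chain.
// Assumption: nodes offer connect(destination), as in the Web Audio API.
interface ConnectableNode {
  connect(destination: ConnectableNode): unknown;
}

// Connects nodes left to right: first -> rest[0] -> rest[1] -> ...
// Returns the last node in the chain.
function connectChain(
  first: ConnectableNode,
  ...rest: ConnectableNode[]
): ConnectableNode {
  let previous = first;
  for (const next of rest) {
    previous.connect(next);
    previous = next;
  }
  return previous;
}

// Hypothetical usage with a context instance named `audioContext`:
// connectChain(
//   audioContext.createOscillator(), // source node
//   audioContext.createGain(),       // effect node
//   audioContext.createAnalyser(),   // analysis node
//   audioContext.destination         // destination node
// );
```

Passing the nodes in signal-flow order keeps the graph topology readable in one place.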

Rendering audio graph

Audio graph rendering is done in blocks of sample-frames. The number of sample-frames in a block is called the render quantum size, and the block itself is called a render quantum. The render quantum size is 128 by default and remains constant.

The AudioContext rendering thread is driven by a system-level audio callback. Each call has a system-level audio callback buffer size: a varying number of sample-frames that must be computed before the next system-level audio callback arrives. The render quantum size does not have to be a divisor of the system-level audio callback buffer size.

The concept of a system-level audio callback does not apply to OfflineAudioContext.
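To make the relationship between render quanta and callback buffers concrete, here is a small arithmetic sketch. The function names are hypothetical, and how an implementation carries leftover frames between callbacks is not described in this document; the code only shows the numbers involved.

```typescript
// Default render quantum size, in sample-frames.
const RENDER_QUANTUM_SIZE = 128;

// Duration of one render quantum in seconds at the given sample rate.
function renderQuantumDuration(sampleRate: number): number {
  return RENDER_QUANTUM_SIZE / sampleRate;
}

// Number of whole render quanta needed to cover one callback buffer.
function quantaNeeded(callbackBufferSize: number): number {
  return Math.ceil(callbackBufferSize / RENDER_QUANTUM_SIZE);
}

// Frames rendered beyond what the callback asked for when the
// callback buffer size is not a multiple of the render quantum size.
function surplusFrames(callbackBufferSize: number): number {
  return quantaNeeded(callbackBufferSize) * RENDER_QUANTUM_SIZE - callbackBufferSize;
}

// Example: a 480-frame callback buffer is covered by 4 render quanta
// (512 frames), which leaves 32 surplus frames.
```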

Properties

| Name | Type | Description | Access |
|---|---|---|---|
| currentTime | number | Double value representing an ever-increasing hardware time in seconds, starting from 0. | Read only |
| destination | AudioDestinationNode | Final output destination associated with the context. | Read only |
| sampleRate | number | Float value representing the sample rate (in samples per second) used by all nodes in this context. | Read only |
| state | ContextState | Enumerated value representing the current state of the context. | Read only |

Methods

createAnalyser

Creates AnalyserNode.

Returns AnalyserNode.

createBiquadFilter

Creates BiquadFilterNode.

Returns BiquadFilterNode.

createBuffer

Creates AudioBuffer.

| Parameter | Type | Description |
|---|---|---|
| numOfChannels | number | An integer representing the number of channels of the buffer. |
| length | number | An integer representing the length of the buffer in sample-frames. A two-second buffer has a length of 2 * sampleRate. |
| sampleRate | number | A float representing the sample rate of the buffer. |

Errors

| Error type | Description |
|---|---|
| NotSupportedError | numOfChannels is outside the nominal range [1, 32]. |
| NotSupportedError | sampleRate is outside the nominal range [8000, 96000]. |
| NotSupportedError | length is less than 1. |

Returns AudioBuffer.
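The length rule and the error conditions above can be checked before calling createBuffer. The sketch below uses hypothetical helper names (`framesForDuration`, `checkCreateBufferArgs`); it mirrors the ranges listed in the errors table and is not part of the library.

```typescript
// Converts a duration in seconds to a buffer length in sample-frames.
function framesForDuration(seconds: number, sampleRate: number): number {
  return Math.round(seconds * sampleRate);
}

// Returns a list of problems matching the errors table above.
// An empty list means the arguments are within the documented ranges.
function checkCreateBufferArgs(
  numOfChannels: number,
  length: number,
  sampleRate: number
): string[] {
  const problems: string[] = [];
  if (numOfChannels < 1 || numOfChannels > 32) {
    problems.push('numOfChannels is outside the nominal range [1, 32]');
  }
  if (sampleRate < 8000 || sampleRate > 96000) {
    problems.push('sampleRate is outside the nominal range [8000, 96000]');
  }
  if (length < 1) {
    problems.push('length is less than 1');
  }
  return problems;
}

// A two-second stereo buffer at 44100 Hz needs 2 * 44100 = 88200 frames.
const twoSecondLength = framesForDuration(2, 44100);
const problems = checkCreateBufferArgs(2, twoSecondLength, 44100);
// With a context instance named `audioContext` (hypothetical), the call would be:
// audioContext.createBuffer(2, twoSecondLength, 44100);
```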

createBufferSource

Creates AudioBufferSourceNode.

| Parameter | Type | Description |
|---|---|---|
| options Optional | { pitchCorrection: boolean } | Object whose pitchCorrection flag specifies whether pitch correction has to be available. |

Returns AudioBufferSourceNode.

createBufferQueueSource Mobile only

Creates AudioBufferQueueSourceNode.

| Parameter | Type | Description |
|---|---|---|
| options Optional | { pitchCorrection: boolean } | Object whose pitchCorrection flag specifies whether pitch correction has to be available. |

Returns AudioBufferQueueSourceNode.

createConstantSource

Creates ConstantSourceNode.

Returns ConstantSourceNode.

createConvolver

Creates ConvolverNode.

Returns ConvolverNode.

createDelay

Creates DelayNode.

| Parameter | Type | Description |
|---|---|---|
| maxDelayTime Optional | number | Maximum amount of time to buffer delayed values. |

Returns DelayNode.

createGain

Creates GainNode.

Returns GainNode.

createIIRFilter

Creates IIRFilterNode.

Returns IIRFilterNode.

createOscillator

Creates OscillatorNode.

Returns OscillatorNode.

createPeriodicWave

Creates PeriodicWave. This waveform specifies a repeating pattern that an OscillatorNode can use to generate its output sound.

| Parameter | Type | Description |
|---|---|---|
| real | Float32Array | An array of cosine terms. |
| imag | Float32Array | An array of sine terms. |
| constraints Optional | PeriodicWaveConstraints | An object that specifies whether normalization is disabled. If normalization is not disabled, the periodic wave has a maximum peak value of 1 and a minimum peak value of -1. |

Errors

| Error type | Description |
|---|---|
| InvalidAccessError | real and imag arrays do not have the same length. |

Returns PeriodicWave.
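As an illustration of what the real and imag arrays contain, the sketch below builds the Fourier coefficients of a sawtooth wave. `sawtoothCoefficients` is a hypothetical helper, and treating index 0 as the DC term (with index 1 as the fundamental) is an assumption carried over from the Web Audio API convention.

```typescript
// Builds cosine (real) and sine (imag) terms for a sawtooth wave.
// Sawtooth series: (2 / pi) * sum over n >= 1 of (-1)^(n + 1) * sin(n * t) / n.
// Assumption: index 0 is the DC term and index n is the n-th harmonic.
function sawtoothCoefficients(
  harmonics: number
): { real: Float32Array; imag: Float32Array } {
  if (!Number.isInteger(harmonics) || harmonics < 1) {
    throw new RangeError('harmonics must be a positive integer');
  }
  // Both arrays get the same length, as createPeriodicWave requires.
  const real = new Float32Array(harmonics + 1); // a sawtooth has no cosine terms
  const imag = new Float32Array(harmonics + 1);
  for (let n = 1; n <= harmonics; n++) {
    const sign = n % 2 === 1 ? 1 : -1;
    imag[n] = (sign * 2) / (n * Math.PI);
  }
  return { real, imag };
}

const { real, imag } = sawtoothCoefficients(8);
// With a context instance named `audioContext` (hypothetical), the call would be:
// const wave = audioContext.createPeriodicWave(real, imag);
```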

createRecorderAdapter

Creates RecorderAdapterNode.

Returns RecorderAdapterNode.

createStereoPanner

Creates StereoPannerNode.

Returns StereoPannerNode.

createStreamer Mobile only

Creates StreamerNode.

Returns StreamerNode.

createWaveShaper

Creates WaveShaperNode.

Returns WaveShaperNode.

createWorkletNode Mobile only

Creates WorkletNode.

| Parameter | Type | Description |
|---|---|---|
| worklet | (Array<Float32Array>, number) => void | The worklet to be executed. |
| bufferLength | number | The size of the buffer that will be passed to the worklet on each call. |
| inputChannelCount | number | The number of channels that the node expects as input (it will get min(expected, provided)). |
| workletRuntime | AudioWorkletRuntime | The kind of runtime to use for the worklet. See worklet runtimes for details. |

Errors

| Error type | Description |
|---|---|
| Error | react-native-worklet is not found as a dependency. |
| NotSupportedError | bufferLength is less than 1. |
| NotSupportedError | inputChannelCount is not in the range [1, 32]. |

Returns WorkletNode.

createWorkletSourceNode Mobile only

Creates WorkletSourceNode.

| Parameter | Type | Description |
|---|---|---|
| worklet | (Array<Float32Array>, number, number, number) => void | The worklet to be executed. |
| workletRuntime | AudioWorkletRuntime | The kind of runtime to use for the worklet. See worklet runtimes for details. |

Errors

| Error type | Description |
|---|---|
| Error | react-native-worklet is not found as a dependency. |

Returns WorkletSourceNode.

createWorkletProcessingNode Mobile only

Creates WorkletProcessingNode.

| Parameter | Type | Description |
|---|---|---|
| worklet | (Array<Float32Array>, Array<Float32Array>, number, number) => void | The worklet to be executed. |
| workletRuntime | AudioWorkletRuntime | The kind of runtime to use for the worklet. See worklet runtimes for details. |

Errors

| Error type | Description |
|---|---|
| Error | react-native-worklet is not found as a dependency. |

Returns WorkletProcessingNode.

decodeAudioData

Decodes audio data from either a file path or an ArrayBuffer. The optional sampleRate parameter lets you resample the decoded audio. If it is not provided, the audio is automatically resampled to match the audio context's sampleRate.

For the list of supported formats visit this page.

| Parameter | Type | Description |
|---|---|---|
| input | ArrayBuffer | ArrayBuffer with audio data. |
| | string | Path to a remote or local audio file. |
| | number | Asset module id. Mobile only |
| fetchOptions Optional | RequestInit | Additional header parameters used when a URL is passed and fetched. |

Returns Promise<AudioBuffer>.

Example decoding with memory block

```typescript
const url = ... // URL of an audio file

const buffer = await fetch(url)
  .then((response) => response.arrayBuffer())
  .then((arrayBuffer) => this.audioContext.decodeAudioData(arrayBuffer))
  .catch((error) => {
    console.error('Error decoding audio data source:', error);
    return null;
  });
```

Example using expo-asset library

```typescript
import { Asset } from 'expo-asset';

const buffer = await Asset.fromModule(require('@/assets/music/example.mp3'))
  .downloadAsync()
  .then((asset) => {
    if (!asset.localUri) {
      throw new Error('Failed to load audio asset');
    }
    return this.audioContext.decodeAudioData(asset.localUri);
  });
```

decodePCMInBase64

Decodes base64-encoded PCM audio data.

| Parameter | Type | Description |
|---|---|---|
| base64String | string | Base64-encoded PCM audio data. |
| inputSampleRate | number | Sample rate of the input PCM data. |
| inputChannelCount | number | Number of channels in the input PCM data. |
| isInterleaved Optional | boolean | Whether the PCM data is interleaved. Default is true. |

Returns Promise<AudioBuffer>.

Example decoding with data in base64 format

```typescript
const data = ... // data encoded as a base64 string

// data is not interleaved (Channel1, Channel1, ..., Channel2, Channel2, ...)
const buffer = await this.audioContext.decodePCMInBase64(data, 4800, 2, false);
```
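The difference between interleaved and non-interleaved layouts, which the isInterleaved flag describes, can be sketched on already-decoded samples. The helpers below (`deinterleave`, `splitPlanar`) are hypothetical and work on a plain Float32Array; the binary sample format inside the base64 string is not specified in this document and is not modeled here.

```typescript
// Validates inputs and returns the number of frames per channel.
function frameCount(samples: Float32Array, channelCount: number): number {
  if (!Number.isInteger(channelCount) || channelCount < 1) {
    throw new RangeError('channelCount must be a positive integer');
  }
  if (samples.length % channelCount !== 0) {
    throw new RangeError('sample count must be a multiple of channelCount');
  }
  return samples.length / channelCount;
}

// Interleaved layout: Channel1, Channel2, Channel1, Channel2, ...
function deinterleave(samples: Float32Array, channelCount: number): Float32Array[] {
  const frames = frameCount(samples, channelCount);
  const channels: Float32Array[] = [];
  for (let c = 0; c < channelCount; c++) {
    const channel = new Float32Array(frames);
    for (let f = 0; f < frames; f++) {
      // The index is always in range; `?? 0` only satisfies strict index checks.
      channel[f] = samples[f * channelCount + c] ?? 0;
    }
    channels.push(channel);
  }
  return channels;
}

// Non-interleaved layout: Channel1, Channel1, ..., Channel2, Channel2, ...
function splitPlanar(samples: Float32Array, channelCount: number): Float32Array[] {
  const frames = frameCount(samples, channelCount);
  const channels: Float32Array[] = [];
  for (let c = 0; c < channelCount; c++) {
    channels.push(samples.slice(c * frames, (c + 1) * frames));
  }
  return channels;
}
```

Both helpers produce the same per-channel arrays when given the same audio in the two layouts.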

Remarks

currentTime

- The timer starts when the context is created and stops while the context is suspended.

ContextState

Acceptable values:

- suspended: The audio context has been suspended (with either suspend or OfflineAudioContext.suspend).
- running: The audio context is running normally.
- closed: The audio context has been closed (with the close method).
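The three states can be modeled as a string union for type-safe checks. This is a sketch under the assumption that ContextState values are the plain strings listed above; `isContextState` and `describeState` are hypothetical helpers.

```typescript
// Assumption: ContextState is a union of the three string values listed above.
type ContextState = 'suspended' | 'running' | 'closed';

const CONTEXT_STATES: readonly string[] = ['suspended', 'running', 'closed'];

// Type guard for values coming from untyped sources.
function isContextState(value: unknown): value is ContextState {
  return typeof value === 'string' && CONTEXT_STATES.includes(value);
}

// Maps each state to a short description based on the remarks in this page.
function describeState(state: ContextState): string {
  switch (state) {
    case 'suspended':
      return 'suspended: currentTime is not advancing';
    case 'running':
      return 'running: audio is processed normally';
    case 'closed':
      return 'closed: the context has been closed';
  }
}

// Hypothetical usage with a context instance named `audioContext`:
// console.log(describeState(audioContext.state));
```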